前言

pyquery是一個(gè)類似jquery的python庫(kù),它實(shí)現(xiàn)能夠在xml文檔中進(jìn)行jQuery查詢,pyquery使用lxml解析器進(jìn)行快速在xml和html文檔上操作,它提供了和jQuery類似的語(yǔ)法來(lái)解析HTML文檔,支持CSS選擇器,使用非常方便

1、pyquery安裝

pip方式安裝:

|

1

2

3

4

5

6

|

$pip install pyquery#它依賴cssselect和lxml包pyquery==1.4.0 - cssselect [required: >0.7.9, installed: 1.0.3] #CSS選擇器并將它轉(zhuǎn)換為XPath表達(dá)式 |

驗(yàn)證安裝:

|

1

2

3

4

|

In [1]: import pyqueryIn [2]: pyquery.text |

2、pyquery對(duì)象初始化

pyquery首先需要傳入HTML文本來(lái)初始化一個(gè)pyquery對(duì)象,它的初始化方式有多種,如直接傳入字符串,傳入U(xiǎn)RL或者傳入文件名

(1)字符串初始化

|

1

2

3

4

5

6

7

8

9

10

11

12

13

|

from pyquery import PyQuery as pqhtml='''<div id="wenzhangziti" class="article 389862"><p>人生是一條沒有盡頭的路,不要留戀逝去的夢(mèng),把命運(yùn)掌握在自己手中,讓我們來(lái)掌握自己的命運(yùn),別讓別人的干擾與誘惑,別讓功名與利祿,來(lái)打翻我們這壇陳釀已久的命運(yùn)之酒!</p></div>'''doc=pq(html) #初始化并創(chuàng)建pyquery對(duì)象print(type(doc))print(doc('p').text())#<class 'pyquery.pyquery.PyQuery'>人生是一條沒有盡頭的路,不要留戀逝去的夢(mèng),把命運(yùn)掌握在自己手中,讓我們來(lái)掌握自己的命運(yùn),別讓別人的干擾與誘惑,別讓功名與利祿,來(lái)打翻我們這壇陳釀已久的命運(yùn)之酒! |

(2)URL初始化

|

1

2

3

4

5

6

7

8

9

|

from pyquery import PyQuery as pqdoc=pq(url='https://www.cnblogs.com/zhangxinqi/p/9218395.html')print(type(doc))print(doc('title'))#<class 'pyquery.pyquery.PyQuery'><title>python3解析庫(kù)BeautifulSoup4 - Py.qi - 博客園</title> |

PyQuery能夠從url加載一個(gè)html文檔,之際上是默認(rèn)情況下調(diào)用python的urllib庫(kù)去請(qǐng)求響應(yīng),如果requests已安裝的話它將使用requests來(lái)請(qǐng)求響應(yīng),那我們就可以使用request的請(qǐng)求參數(shù)來(lái)構(gòu)造請(qǐng)求了,實(shí)際請(qǐng)求如下:

|

1

2

3

4

5

6

7

8

9

10

|

from pyquery import PyQuery as pqimport requestsdoc=pq(requests.get(url='https://www.cnblogs.com/zhangxinqi/p/9218395.html').text)print(type(doc))print(doc('title'))#輸出同上一樣<class 'pyquery.pyquery.PyQuery'><title>python3解析庫(kù)BeautifulSoup4 - Py.qi - 博客園</title> |

(3)通過(guò)文件初始化

通過(guò)本地的HTML文件來(lái)構(gòu)造PyQuery對(duì)象

|

1

2

3

4

5

6

|

from pyquery import PyQuery as pqdoc=pq(filename='demo.html',parser='html')#doc=pq(open('demo.html','r',encoding='utf-8').read(),parser='html') #注意:在讀取有中文的HTML文件時(shí),請(qǐng)使用此方法,否則會(huì)報(bào)解碼錯(cuò)誤print(type(doc))print(doc('p')) |

3、CSS選擇器

在使用屬性選擇器中,使用屬性選擇特定的標(biāo)簽,標(biāo)簽和CSS標(biāo)識(shí)必須引用為字符串,它會(huì)過(guò)濾篩選符合條件的節(jié)點(diǎn)打印輸出,返回的是一個(gè)PyQuery類型對(duì)象

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

|

from pyquery import PyQuery as pqimport requestshtml='''<div id="container"> <ul class="list"> <li class="item-0">first item</li> <li class="item-1"><a href="link2.html" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" >second item</a></li> <li class="item-0 active"><a href="link3.html" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" ><span class="bold">third item</span></a></li> <li class="item-1 active"><a href="link4.html" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" >fourth item</a></li> <li class="item-0"><a href="link5.html" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" >fifth item</a></li> </ul> </div>'''doc=pq(html,parser='html')print(doc('#container .list .item-0 a'))print(doc('.list .item-1'))#<a href="link3.html" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" ><span class="bold">third item</span></a><a href="link5.html" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" >fifth item</a><li class="item-1"><a href="link2.html" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" >second item</a></li> <li class="item-1 active"><a href="link4.html" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" rel="external nofollow" >fourth item</a></li> |

4、查找節(jié)點(diǎn)

PyQuery使用查詢函數(shù)來(lái)查詢節(jié)點(diǎn),同jQuery中的函數(shù)用法完全相同

(1)查找子節(jié)點(diǎn)和子孫節(jié)點(diǎn)

使用find()方法獲取子孫節(jié)點(diǎn),children()獲取子節(jié)點(diǎn),使用以上的HTML代碼測(cè)試

|

1

2

3

4

5

6

|

from pyquery import PyQuery as pqimport requestsdoc=pq(html,parser='html')print('find:',doc.find('a'))print('children:',doc('li').children('a')) |

(2)獲取父節(jié)點(diǎn)和祖先節(jié)點(diǎn)

parent()方法獲取父節(jié)點(diǎn),parents()獲取祖先節(jié)點(diǎn)

|

1

2

|

doc(.list).parent()doc(.list).parents() |

(3)獲取兄弟節(jié)點(diǎn)

siblings()方法用來(lái)獲取兄弟節(jié)點(diǎn),可以嵌套使用,傳入CSS選擇器即可繼續(xù)匹配

|

1

|

doc('.list .item-0 .active').siblings('.active') |

5、遍歷

對(duì)于pyquery的選擇結(jié)果可能是多個(gè)字節(jié),也可能是單個(gè)節(jié)點(diǎn),類型都是PyQuery類型,它沒有返回列表等形式,對(duì)于當(dāng)個(gè)節(jié)點(diǎn)我們可指直接打印輸出或者直接轉(zhuǎn)換成字符串,而對(duì)于多個(gè)節(jié)點(diǎn)的結(jié)果,我們需要遍歷來(lái)獲取所有節(jié)點(diǎn)可以使用items()方法,它會(huì)返回一個(gè)生成器,循環(huán)得到的每個(gè)節(jié)點(diǎn)類型依然是PyQuery類型,所以我們可以繼續(xù)方法來(lái)選擇節(jié)點(diǎn)或?qū)傩裕瑑?nèi)容等

|

1

2

3

|

lis=doc('li').items()for i in lis: print(i('a')) #繼續(xù)獲取節(jié)點(diǎn)下的子節(jié)點(diǎn) |

6、獲取信息

attr()方法用來(lái)獲取屬性,如返回的結(jié)果有多個(gè)時(shí)可以調(diào)用items()方法來(lái)遍歷獲取

|

1

|

doc('.item-0.active a').attr('href') #多屬性值中間不能有空格 |

text()方法用來(lái)獲取文本內(nèi)容,它只返回內(nèi)部的文本信息不包括HTML文本內(nèi)容,如果想返回包括HTML的文本內(nèi)容可以使用html()方法,如果結(jié)果有多個(gè),text()方法會(huì)方法所有節(jié)點(diǎn)的文本信息內(nèi)容并將它們拼接用空格分開返回字符串內(nèi)容,html()方法只會(huì)返回第一個(gè)節(jié)點(diǎn)的HTML文本,如果要獲取所有就需要使用items()方法來(lái)遍歷獲取了

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

|

from pyquery import PyQuery as pqhtml='''<div id="container"> <ul class="list"> <li class="item-0">first item</li> <li class="item-1"><a href="link2.html">second item</a></li> <li class="item-0 active"><a href="link3.html"><span class="bold">third item</span></a></li> <li class="item-1 active"><a href="link4.html">fourth item</a></li> <li class="item-0"><a href="link5.html">fifth item</a></li> </ul> </div>'''doc=pq(html,parser='html')print('text:',doc('li').text()) #獲取li節(jié)點(diǎn)下的所有文本信息lis=doc('li').items()for i in lis: print('html:',i.html()) #獲取所有l(wèi)i節(jié)點(diǎn)下的HTML文本#text: first item second item third item fourth item fifth itemhtml: first itemhtml: <a href="link2.html">second item</a>html: <a href="link3.html"><span class="bold">third item</span></a>html: <a href="link4.html">fourth item</a>html: <a href="link5.html">fifth item</a> |

7、節(jié)點(diǎn)操作

pyquery提供了一系列方法來(lái)對(duì)節(jié)點(diǎn)進(jìn)行動(dòng)態(tài)修改,如添加一個(gè)class,移除某個(gè)節(jié)點(diǎn),修改某個(gè)屬性的值

addClass()增加Class,removeClass()刪除Class

attr()增加屬性和值,text()增加文本內(nèi)容,html()增加HTML文本,remove()移除

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

|

from pyquery import PyQuery as pqimport requestshtml='''<div id="container"> <ul class="list"> <li id="1">first item</li> <li class="item-1"><a href="link2.html">second item</a></li> <li class="item-2 active"><a href="link3.html"><span class="bold">third item</span></a></li> <li class="item-3 active"><a href="link4.html">fourth item</a></li> <li class="item-4"><a href="link5.html">fifth item</a></li> </ul> </div>'''doc=pq(html,parser='html')print(doc('#1'))print(doc('#1').add_class('myclass')) #增加Classprint(doc('.item-1').remove_class('item-1')) #刪除Classprint(doc('#1').attr('name','link')) #添加屬性name=linkprint(doc('#1').text('hello world')) #添加文本print(doc('#1').html('<span>changed item</span>')) #添加HTML文本print(doc('.item-2.active a').remove('span')) #刪除節(jié)點(diǎn)#<li id="1">first item</li> <li id="1" class="myclass">first item</li> <li class=""><a href="link2.html">second item</a></li> <li id="1" class="myclass" name="link">first item</li> <li id="1" class="myclass" name="link">hello world</li> <li id="1" class="myclass" name="link"><span>changed item</span></li><a href="link3.html"/> |

after()在節(jié)點(diǎn)后添加值

before()在節(jié)點(diǎn)之前插入值

append()將值添加到每個(gè)節(jié)點(diǎn)

contents()返回文本節(jié)點(diǎn)內(nèi)容

empty()刪除節(jié)點(diǎn)內(nèi)容

remove_attr()刪除屬性

val()設(shè)置或獲取屬性值

另外還有很多節(jié)點(diǎn)操作方法,它們和jQuery的用法完全一致,詳細(xì)請(qǐng)參考:http://pyquery.readthedocs.io/en/latest/api.html

8、偽類選擇器

CSS選擇器之所以強(qiáng)大,是因?yàn)樗С侄喾N多樣的偽類選擇器,如:選擇第一個(gè)節(jié)點(diǎn),最后一個(gè)節(jié)點(diǎn),奇偶數(shù)節(jié)點(diǎn)等。

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

|

#!/usr/bin/env python#coding:utf-8from pyquery import PyQuery as pqhtml='''<div id="container"> <ul class="list"> <li id="1">first item</li> <li class="item-1"><a href="link2.html">second item</a></li> <li class="item-2 active"><a href="link3.html"><span class="bold">third item</span></a></li> <li class="item-3 active"><a href="link4.html">fourth item</a></li> <li class="item-4"><a href="link5.html">fifth item</a></li> </ul> <div><input type="text" value="username"/></div> </div>'''doc=pq(html,parser='html')print('第一個(gè)li節(jié)點(diǎn):',doc('li:first-child')) #第一個(gè)li節(jié)點(diǎn)print('最后一個(gè)li節(jié)點(diǎn):',doc('li:last_child')) #最后一個(gè)li節(jié)點(diǎn)print('第二個(gè)li節(jié)點(diǎn):',doc('li:nth-child(2)')) #第二個(gè)li節(jié)點(diǎn)print('第三個(gè)之后的所有l(wèi)i節(jié)點(diǎn):',doc('li:gt(2)')) #第三個(gè)之后的所有l(wèi)i節(jié)點(diǎn)print('偶數(shù)的所有l(wèi)i節(jié)點(diǎn):',doc('li:nth-child(2n)')) #偶數(shù)的所有l(wèi)i節(jié)點(diǎn)print('包含文本內(nèi)容的節(jié)點(diǎn):',doc('li:contains(second)')) #包含文本內(nèi)容的節(jié)點(diǎn)print('索引第一個(gè)節(jié)點(diǎn):',doc('li:eq(0)'))print('奇數(shù)節(jié)點(diǎn):',doc('li:even'))print('偶數(shù)節(jié)點(diǎn):',doc('li:odd'))#第一個(gè)li節(jié)點(diǎn): <li id="1">first item</li> 最后一個(gè)li節(jié)點(diǎn): <li class="item-4"><a href="link5.html">fifth item</a></li> 第二個(gè)li節(jié)點(diǎn): <li class="item-1"><a href="link2.html">second item</a></li> 第三個(gè)之后的所有l(wèi)i節(jié)點(diǎn): <li class="item-3 active"><a href="link4.html">fourth item</a></li> <li class="item-4"><a href="link5.html">fifth item</a></li> 偶數(shù)的所有l(wèi)i節(jié)點(diǎn): <li class="item-1"><a href="link2.html">second item</a></li> <li class="item-3 active"><a href="link4.html">fourth item</a></li> 包含文本內(nèi)容的節(jié)點(diǎn): <li class="item-1"><a href="link2.html">second item</a></li> 索引第一個(gè)節(jié)點(diǎn): <li id="1">first item</li> 奇數(shù)節(jié)點(diǎn): <li id="1">first item</li> <li class="item-2 active"><a href="link3.html"><span class="bold">third item</span></a></li> <li class="item-4"><a href="link5.html">fifth item</a></li> 偶數(shù)節(jié)點(diǎn): <li class="item-1"><a href="link2.html">second item</a></li> <li class="item-3 active"><a href="link4.html">fourth item</a></li> |

更多偽類參考:http://pyquery.readthedocs.io/en/latest/pseudo_classes.html

更多css選擇器參考:http://www.w3school.com.cn/cssref/css_selectors.asp

9、實(shí)例應(yīng)用

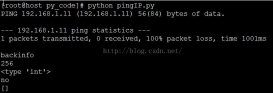

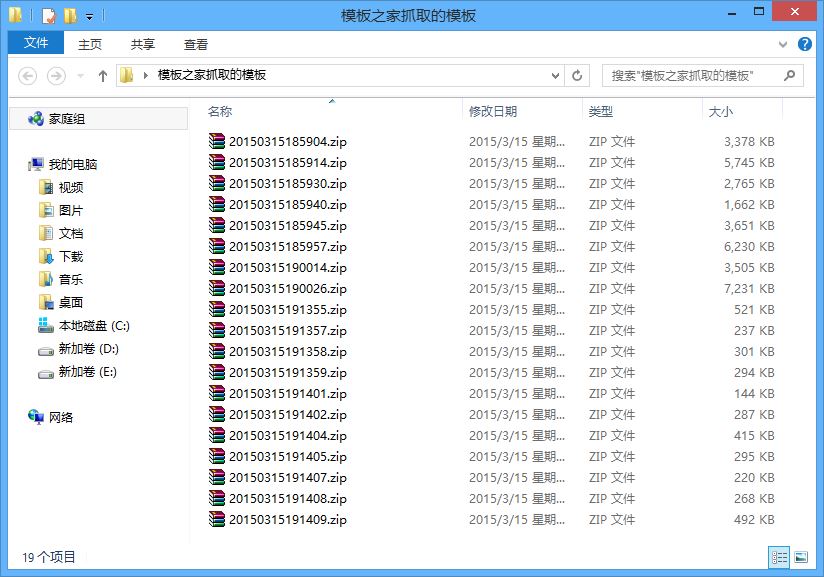

抓取http://www.mzitu.com網(wǎng)站美女圖片12萬(wàn)張用時(shí)28分鐘,總大小9G,主要受網(wǎng)絡(luò)帶寬影響,下載數(shù)據(jù)有點(diǎn)慢

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

|

#!/usr/bin/env python#coding:utf-8import requestsfrom requests.exceptions import RequestExceptionfrom pyquery import PyQuery as pqfrom PIL import Imagefrom PIL import ImageFilefrom io import BytesIOimport timefrom multiprocessing import Pool,freeze_supportImageFile.LOAD_TRUNCATED_IMAGES = Trueheaders={'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36','Referer':'http://www.mzitu.com'}img_headers={'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.62 Safari/537.36','Referer':'http://i.meizitu.net'}#保持會(huì)話請(qǐng)求sesion=requests.Session()#獲取首頁(yè)所有URL并返回列表def get_url(url): list_url = [] try: html=sesion.get(url,headers=headers).text doc=pq(html,parser='html') url_path=doc('#pins > li').children('a') for i in url_path.items(): list_url.append(i.attr('href')) except RequestException as e: print('get_url_RequestException:',e) except Exception as e: print('get_url_Exception:',e) return list_url#組合首頁(yè)中每個(gè)地址的圖片分頁(yè)返回列表def list_get_pages(list_url): list_url_fen=[] try: for i in list_url: doc_children = pq(sesion.get(i,headers=headers).text,parser='html') img_number = doc_children('body > div.main > div.content > div.pagenavi > a:nth-child(7) > span').text() number=int(img_number.strip()) for j in range(1,number+1): list_url_fen.append(i+'/'+str(j)) except ValueError as e: print('list_get_pages_ValueError:',e) except RequestException as e: print('list_get_pages_RequestException',e) except Exception as e: print('list_get_pages_Exception:',e) return list_url_fen#獲取image地址并下載圖片def get_image(url): im_path='' try: html=sesion.get(url, headers=headers).text doc=pq(html,parser='html') im_path=doc('.main-image a img').attr('src') image_names = ''.join(im_path.split('/')[-3:]) image_path = 'D:\images\\' + image_names with open('img_url.txt','a') as f: f.write(im_path + '\n') r=requests.get(im_path,headers=img_headers) b=BytesIO(r.content) i=Image.open(b) i.save(image_path) b.close() i.close() #print('下載圖片:{}成功!'.format(image_names)) except RequestException as e: print('RequestException:',e) except OSError as e: print('OSError:',e) except Exception as e: #必須捕獲所有異常,運(yùn)行中有一些鏈接地址不符合抓取規(guī)律,需要捕獲異常使程序正常運(yùn)行 print('Exception:',e) return im_path#主調(diào)用函數(shù)def main(item): url1='http://www.mzitu.com/page/{}'.format(item) #分頁(yè)地址 print('開始下載地址:{}'.format(url1)) 獲取首頁(yè)鏈接地址 html=get_url(url1) #獲取分頁(yè)鏈接地址 list_fenurl = list_get_pages(html) #根據(jù)分頁(yè)鏈接地址獲取圖片并下載 for i in list_fenurl: get_image(i) return len(list_fenurl) #統(tǒng)計(jì)下載數(shù)if __name__ == '__main__': freeze_support() #windows下進(jìn)程調(diào)用時(shí)必須添加 pool=Pool() #創(chuàng)建進(jìn)程池 start=time.time() count=pool.map(main,[i for i in range(1,185)]) #多進(jìn)程運(yùn)行翻頁(yè)主頁(yè) print(sum(count),count) #獲取總的下載數(shù) end=time.time() data=time.strftime('%M:%S',time.localtime(end-start)) #獲取程序運(yùn)行時(shí)間 print('程序運(yùn)行時(shí)間:{}分{}秒'.format(*data.split(':')))#學(xué)習(xí)階段,代碼寫得通用性很差,以后改進(jìn)!#運(yùn)行結(jié)果#會(huì)有幾個(gè)報(bào)錯(cuò)都忽略了是獲取文件名時(shí)的分割問(wèn)題和在圖片很少的情況下導(dǎo)致獲取不到單分頁(yè)圖片的數(shù)目,先忽略以后有時(shí)間再改正#Exception: 'NoneType' object has no attribute 'split'#list_get_pages_ValueError: invalid literal for int() with base 10: '下一頁(yè)»'開始下載地址:http://www.mzitu.com/page/137OSError: image file is truncated (22 bytes not processed)開始下載地址:http://www.mzitu.com/page/138程序運(yùn)行時(shí)間:28分27秒進(jìn)程完成,退出碼 0 |

pyquery相關(guān)鏈接:

GitHub:https://github.com/gawel/pyquery

PyPI:https://pypi.python.org/pypi/pyquery

官方文檔:http://pyquery.readthedocs.io

總結(jié)

以上就是這篇文章的全部?jī)?nèi)容了,希望本文的內(nèi)容對(duì)大家的學(xué)習(xí)或者工作具有一定的參考學(xué)習(xí)價(jià)值,如果有疑問(wèn)大家可以留言交流,謝謝大家對(duì)服務(wù)器之家的支持。

原文鏈接:https://www.cnblogs.com/zhangxinqi/p/9219476.html