1. Creating a tfrecord file

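A tfrecord file can store three kinds of data: string, int64, and float32. Each value is wrapped in a list and written into a tf.train.Feature through tf.train.BytesList, tf.train.Int64List, or tf.train.FloatList respectively, as shown below:

```python
# feature is usually a multi-dimensional array; convert it to bytes and wrap it in a list first
tf.train.Feature(bytes_list=tf.train.BytesList(value=[feature.tostring()]))
# tostring() discards the shape of feature, so write the shape as well
tf.train.Feature(int64_list=tf.train.Int64List(value=list(feature.shape)))
tf.train.Feature(float_list=tf.train.FloatList(value=[label]))
```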
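To see why the shape has to be stored next to the bytes, here is a minimal sketch in plain Python. It uses the standard `struct` module as a stand-in for NumPy's `tostring()`, and the helper name `to_bytes` is made up for illustration. Two arrays with different shapes serialize to exactly the same byte string:

```python
import struct

def to_bytes(rows):
    """Flatten a 2-D list of floats row by row and pack it as float32 bytes."""
    flat = [v for row in rows for v in row]
    return struct.pack('<%df' % len(flat), *flat)

a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]    # shape (2, 3)
b = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # shape (3, 2)

bytes_a = to_bytes(a)
bytes_b = to_bytes(b)

# Both byte strings hold the same 6 float32 values; the shape is gone.
same_bytes = bytes_a == bytes_b
n_bytes = len(bytes_a)  # 6 values * 4 bytes each
```

Without the separately stored shape, a reader of these bytes could not tell the two arrays apart.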

With the operations above, collect the data to be written into a dict, build a tf.train.Features from it, and then build a tf.train.Example, as follows:

```python
def get_tfrecords_example(feature, label):
    tfrecords_features = {}
    feat_shape = feature.shape
    tfrecords_features['feature'] = tf.train.Feature(
        bytes_list=tf.train.BytesList(value=[feature.tostring()]))
    tfrecords_features['shape'] = tf.train.Feature(
        int64_list=tf.train.Int64List(value=list(feat_shape)))
    # label is already a list-like value here, so it is passed directly
    tfrecords_features['label'] = tf.train.Feature(
        float_list=tf.train.FloatList(value=label))
    return tf.train.Example(features=tf.train.Features(feature=tfrecords_features))
```

Serialize the resulting tf.train.Example, and it can then be written to a tfrecord file with tf.python_io.TFRecordWriter, as follows:

```python
tfrecord_wrt = tf.python_io.TFRecordWriter('xxx.tfrecord')  # create the tfrecord writer; the file is named xxx
exmp = get_tfrecords_example(feats[inx], labels[inx])       # pack the data into an Example
exmp_serial = exmp.SerializeToString()                      # serialize the Example
tfrecord_wrt.write(exmp_serial)                             # write it to the tfrecord file
tfrecord_wrt.close()                                        # close the writer when done
```

Full code:

```python
import random

import tensorflow as tf
from tensorflow.contrib.learn.python.learn.datasets.mnist import read_data_sets

mnist = read_data_sets("MNIST_data/", one_hot=True)


# pack one sample into a tf.train.Example
def get_tfrecords_example(feature, label):
    tfrecords_features = {}
    feat_shape = feature.shape
    tfrecords_features['feature'] = tf.train.Feature(
        bytes_list=tf.train.BytesList(value=[feature.tostring()]))
    tfrecords_features['shape'] = tf.train.Feature(
        int64_list=tf.train.Int64List(value=list(feat_shape)))
    tfrecords_features['label'] = tf.train.Feature(
        float_list=tf.train.FloatList(value=label))
    return tf.train.Example(features=tf.train.Features(feature=tfrecords_features))


# write all samples to a tfrecord file
def make_tfrecord(data, outf_nm='mnist-train'):
    feats, labels = data
    outf_nm += '.tfrecord'
    tfrecord_wrt = tf.python_io.TFRecordWriter(outf_nm)
    ndatas = len(labels)
    for inx in range(ndatas):
        exmp = get_tfrecords_example(feats[inx], labels[inx])
        exmp_serial = exmp.SerializeToString()
        tfrecord_wrt.write(exmp_serial)
    tfrecord_wrt.close()


nDatas = len(mnist.train.labels)
inx_lst = list(range(nDatas))  # list() so that random.shuffle also works on Python 3
random.shuffle(inx_lst)
random.shuffle(inx_lst)
ntrains = int(0.85*nDatas)

# make training set
data = ([mnist.train.images[i] for i in inx_lst[:ntrains]],
        [mnist.train.labels[i] for i in inx_lst[:ntrains]])
make_tfrecord(data, outf_nm='mnist-train')

# make validation set
data = ([mnist.train.images[i] for i in inx_lst[ntrains:]],
        [mnist.train.labels[i] for i in inx_lst[ntrains:]])
make_tfrecord(data, outf_nm='mnist-val')

# make test set
data = (mnist.test.images, mnist.test.labels)
make_tfrecord(data, outf_nm='mnist-test')
```
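The split sizes that appear later (46750 training and 8250 validation examples) follow directly from this script. The sketch below reproduces only the index bookkeeping in plain Python. It assumes the 55,000 training examples that `read_data_sets` returns with its default settings, and it fixes the random seed purely so the run is repeatable:

```python
import random

n_datas = 55000                  # assumed size of mnist.train
inx_lst = list(range(n_datas))   # random.shuffle needs a mutable list
random.seed(0)                   # seed chosen only for repeatability
random.shuffle(inx_lst)

n_trains = int(0.85 * n_datas)   # 85% of the indices go to training
train_inx = inx_lst[:n_trains]
val_inx = inx_lst[n_trains:]     # the remaining 15% go to validation

print(len(train_inx), len(val_inx))  # prints: 46750 8250
```

Because both slices come from one shuffled index list, no example can land in both the training and the validation file.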

2. Using tfrecord files: tf.data.TFRecordDataset

Create a TFRecordDataset from a tfrecord file:

```python
dataset = tf.data.TFRecordDataset('xxx.tfrecord')
```

Each record in the tfrecord file is a serialized tf.train.Example; parse it with tf.parse_single_example:

```python
feats = tf.parse_single_example(serial_exmp, features=data_dict)
```

Here data_dict is a dict whose keys are the keys used when writing the tfrecord file, and whose values are tf.FixedLenFeature([], tf.string), tf.FixedLenFeature([], tf.int64), or tf.FixedLenFeature([], tf.float32), matching the data type of each entry. Putting it together:

```python
def parse_exmp(serial_exmp):
    # [10] for label because each label is a list of 10 elements;
    # [x] for shape, where x is the length of shape
    feats = tf.parse_single_example(serial_exmp, features={
        'feature': tf.FixedLenFeature([], tf.string),
        'label': tf.FixedLenFeature([10], tf.float32),
        'shape': tf.FixedLenFeature([x], tf.int64)})
    image = tf.decode_raw(feats['feature'], tf.float32)
    label = feats['label']
    shape = tf.cast(feats['shape'], tf.int32)
    return image, label, shape
```
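`tf.decode_raw` returns a flat vector, so the stored shape is what lets a reader rebuild the original array. Below is a minimal plain-Python sketch of that round trip, with `struct` standing in for the TensorFlow ops and a hypothetical helper `reshape_2d` that is not part of any library:

```python
import struct

def reshape_2d(flat, shape):
    """Split a flat list into rows according to a (rows, cols) shape."""
    rows, cols = shape
    assert rows * cols == len(flat), "shape does not match the data"
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]

original = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
shape = (2, 3)

# Writing side: flatten and pack as float32, standing in for tostring().
raw = struct.pack('<6f', *[v for row in original for v in row])

# Reading side: decode the bytes as float32, then restore the shape.
flat = list(struct.unpack('<%df' % (len(raw) // 4), raw))
restored = reshape_2d(flat, shape)
```

Inside a TensorFlow graph the corresponding step would be a `tf.reshape` of the decoded image using the parsed shape.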

To parse every record in the tfrecord file, use the dataset's map method:

```python
dataset = dataset.map(parse_exmp)
```

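The map method accepts an arbitrary function for processing the elements of the dataset. In addition, the repeat, shuffle, and batch methods repeat, shuffle, and batch the dataset; repeat duplicates the dataset so that it covers multiple epochs:

```python
dataset = dataset.repeat(epochs).shuffle(buffer_size).batch(batch_size)
```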
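The order of `repeat` and `batch` changes how many short batches appear. The sketch below imitates the two orderings with plain Python lists rather than `tf.data` (the helper `batch` is illustrative only, and shuffling is left out to keep the output deterministic), using 10 examples, 3 epochs, and a batch size of 4:

```python
def batch(items, batch_size):
    """Group items into consecutive batches; the last one may be short."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]

data = list(range(10))
epochs, batch_size = 3, 4

# Analogue of repeat(epochs).batch(batch_size): batches may span epoch boundaries.
repeat_then_batch = batch(data * epochs, batch_size)

# Analogue of batch(batch_size).repeat(epochs): every epoch ends with a short batch.
batch_then_repeat = batch(data, batch_size) * epochs

sizes_a = [len(b) for b in repeat_then_batch]
sizes_b = [len(b) for b in batch_then_repeat]
print(sizes_a)  # [4, 4, 4, 4, 4, 4, 4, 2]
print(sizes_b)  # [4, 4, 2, 4, 4, 2, 4, 4, 2]
```

Repeating first leaves at most one short batch at the very end, while batching first produces one short batch per epoch whenever the dataset size is not a multiple of the batch size.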

Once the data is parsed, it can be fetched for use by creating an iterator:

```python
iterator = dataset.make_one_shot_iterator()
batch_image, batch_label, batch_shape = iterator.get_next()
```

To feed data from different datasets into the model, first create an iterator handle, i.e. an iterator placeholder:

```python
handle = tf.placeholder(tf.string, shape=[])
iterator = tf.data.Iterator.from_string_handle(
    handle, dataset_train.output_types, dataset_train.output_shapes)
image, label, shape = iterator.get_next()
```

Then create a handle for each dataset and pass it to the placeholder through feed_dict:

```python
with tf.Session() as sess:
    handle_train, handle_val, handle_test = sess.run(
        [it.string_handle() for it in [iter_train, iter_val, iter_test]])
    sess.run([loss, train_op], feed_dict={handle: handle_train})
```
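Conceptually, the handle is a string that selects which underlying iterator the shared `get_next()` pulls from. The following plain-Python analogy shows that dispatch pattern. It is not how TensorFlow implements it, and `FeedableIterator` and the handle strings are invented for illustration:

```python
class FeedableIterator:
    """Pulls the next element from whichever iterator the handle names."""

    def __init__(self):
        self._iterators = {}

    def register(self, handle, iterable):
        # Each source keeps its own position, like a one-shot iterator.
        self._iterators[handle] = iter(iterable)
        return handle

    def get_next(self, handle):
        return next(self._iterators[handle])


feedable = FeedableIterator()
handle_train = feedable.register('train', ['train-0', 'train-1', 'train-2'])
handle_val = feedable.register('val', ['val-0', 'val-1'])

# Switching the handle switches the data source without losing position.
first = feedable.get_next(handle_train)
second = feedable.get_next(handle_val)
third = feedable.get_next(handle_train)
```

The point of the pattern is that the model is built once against a single `get_next()` output, and the choice of training, validation, or test data is deferred to run time.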

Putting it all together:

```python
import tensorflow as tf

train_f, val_f, test_f = ['mnist-%s.tfrecord' % i for i in ['train', 'val', 'test']]

def parse_exmp(serial_exmp):
    # 'shape' is parsed as a scalar ([]); this fits the flattened MNIST images,
    # whose shape has a single element
    feats = tf.parse_single_example(serial_exmp, features={
        'feature': tf.FixedLenFeature([], tf.string),
        'label': tf.FixedLenFeature([10], tf.float32),
        'shape': tf.FixedLenFeature([], tf.int64)})
    image = tf.decode_raw(feats['feature'], tf.float32)
    label = feats['label']
    shape = tf.cast(feats['shape'], tf.int32)
    return image, label, shape

def get_dataset(fname):
    dataset = tf.data.TFRecordDataset(fname)
    return dataset.map(parse_exmp)  # use the padded_batch method if padding is needed

epochs = 16
batch_size = 50
# when nDatas is not divisible by batch_size (e.g. batch_size = 56),
# one batch will contain fewer than batch_size samples

# training dataset
nDatasTrain = 46750
dataset_train = get_dataset(train_f)
dataset_train = dataset_train.repeat(epochs).shuffle(1000).batch(batch_size)
# make sure repeat comes before batch;
# this differs from dataset.shuffle(1000).batch(batch_size).repeat(epochs),
# which yields a short batch in every epoch
# when nDatas is not divisible by batch_size
nBatchs = nDatasTrain*epochs//batch_size

# evaluation dataset
nDatasVal = 8250
dataset_val = get_dataset(val_f)
dataset_val = dataset_val.batch(nDatasVal).repeat(nBatchs//100*2)

# test dataset
nDatasTest = 10000
dataset_test = get_dataset(test_f)
dataset_test = dataset_test.batch(nDatasTest)

# make dataset iterators
iter_train = dataset_train.make_one_shot_iterator()
iter_val = dataset_val.make_one_shot_iterator()
iter_test = dataset_test.make_one_shot_iterator()

# make feedable iterator
handle = tf.placeholder(tf.string, shape=[])
iterator = tf.data.Iterator.from_string_handle(
    handle, dataset_train.output_types, dataset_train.output_shapes)
x, y_, _ = iterator.get_next()
# model and keep_prob are defined elsewhere (see the MNIST experiment in section 3)
train_op, loss, eval_op = model(x, y_)
init = tf.initialize_all_variables()  # deprecated alias of tf.global_variables_initializer()

# summary
logdir = './logs/m4d2a'
def summary_op(datapart='train'):
    tf.summary.scalar(datapart + '-loss', loss)
    tf.summary.scalar(datapart + '-eval', eval_op)
    return tf.summary.merge_all()

summary_op_train = summary_op()
summary_op_test = summary_op('val')

with tf.Session() as sess:
    sess.run(init)
    # loop variable named `it` so it does not shadow the input tensor x
    handle_train, handle_val, handle_test = sess.run(
        [it.string_handle() for it in [iter_train, iter_val, iter_test]])
    _, cur_loss, cur_train_eval, summary = sess.run(
        [train_op, loss, eval_op, summary_op_train],
        feed_dict={handle: handle_train, keep_prob: 0.5})
    cur_val_loss, cur_val_eval, summary = sess.run(
        [loss, eval_op, summary_op_test],
        feed_dict={handle: handle_val, keep_prob: 1.0})
```
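The step counts in this script can be checked by hand. Here is a short sketch of the arithmetic, assuming (as in the experiment in section 3) that validation runs every 100 steps and once more on the last step:

```python
n_datas_train = 46750
epochs = 16
batch_size = 50

# repeat(epochs) followed by batch(batch_size): total number of training batches.
n_batches = n_datas_train * epochs // batch_size

# How many times the script repeats the (single-batch) validation dataset.
val_repeats = n_batches // 100 * 2

# Validation passes actually needed: steps 0, 100, 200, ... plus the last step.
val_steps = [i for i in range(n_batches) if i % 100 == 0 or i == n_batches - 1]

print(n_batches, val_repeats, len(val_steps))  # prints: 14960 298 151
```

Since the validation dataset is batched as one full-size batch and repeated 298 times, the 151 validation passes never exhaust its one-shot iterator.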

3. MNIST experiment

```python
import tensorflow as tf

train_f, val_f, test_f = ['mnist-%s.tfrecord' % i for i in ['train', 'val', 'test']]

def parse_exmp(serial_exmp):
    feats = tf.parse_single_example(serial_exmp, features={
        'feature': tf.FixedLenFeature([], tf.string),
        'label': tf.FixedLenFeature([10], tf.float32),
        'shape': tf.FixedLenFeature([], tf.int64)})
    image = tf.decode_raw(feats['feature'], tf.float32)
    label = feats['label']
    shape = tf.cast(feats['shape'], tf.int32)
    return image, label, shape

def get_dataset(fname):
    dataset = tf.data.TFRecordDataset(fname)
    return dataset.map(parse_exmp)  # use the padded_batch method if padding is needed

epochs = 16
batch_size = 50
# when nDatas is not divisible by batch_size (e.g. batch_size = 56),
# one batch will contain fewer than batch_size samples

# training dataset
nDatasTrain = 46750
dataset_train = get_dataset(train_f)
dataset_train = dataset_train.repeat(epochs).shuffle(1000).batch(batch_size)
# make sure repeat comes before batch;
# this differs from dataset.shuffle(1000).batch(batch_size).repeat(epochs),
# which yields a short batch in every epoch
# when nDatas is not divisible by batch_size
nBatchs = nDatasTrain*epochs//batch_size

# evaluation dataset
nDatasVal = 8250
dataset_val = get_dataset(val_f)
dataset_val = dataset_val.batch(nDatasVal).repeat(nBatchs//100*2)

# test dataset
nDatasTest = 10000
dataset_test = get_dataset(test_f)
dataset_test = dataset_test.batch(nDatasTest)

# make dataset iterators
iter_train = dataset_train.make_one_shot_iterator()
iter_val = dataset_val.make_one_shot_iterator()
iter_test = dataset_test.make_one_shot_iterator()

# make feedable iterator, i.e. iterator placeholder
handle = tf.placeholder(tf.string, shape=[])
iterator = tf.data.Iterator.from_string_handle(
    handle, dataset_train.output_types, dataset_train.output_shapes)
x, y_, _ = iterator.get_next()

# cnn
x_image = tf.reshape(x, [-1, 28, 28, 1])
w_init = tf.truncated_normal_initializer(stddev=0.1, seed=9)
b_init = tf.constant_initializer(0.1)
cnn1 = tf.layers.conv2d(x_image, 32, (5, 5), padding='same', activation=tf.nn.relu,
                        kernel_initializer=w_init, bias_initializer=b_init)
mxpl1 = tf.layers.max_pooling2d(cnn1, 2, strides=2, padding='same')
cnn2 = tf.layers.conv2d(mxpl1, 64, (5, 5), padding='same', activation=tf.nn.relu,
                        kernel_initializer=w_init, bias_initializer=b_init)
mxpl2 = tf.layers.max_pooling2d(cnn2, 2, strides=2, padding='same')
mxpl2_flat = tf.reshape(mxpl2, [-1, 7*7*64])
fc1 = tf.layers.dense(mxpl2_flat, 1024, activation=tf.nn.relu,
                      kernel_initializer=w_init, bias_initializer=b_init)
keep_prob = tf.placeholder('float')
fc1_drop = tf.nn.dropout(fc1, keep_prob)
logits = tf.layers.dense(fc1_drop, 10, kernel_initializer=w_init, bias_initializer=b_init)

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=y_))
optmz = tf.train.AdamOptimizer(1e-4)
train_op = optmz.minimize(loss)

def get_eval_op(logits, labels):
    corr_prd = tf.equal(tf.argmax(logits, 1), tf.argmax(labels, 1))
    return tf.reduce_mean(tf.cast(corr_prd, 'float'))

eval_op = get_eval_op(logits, y_)

init = tf.initialize_all_variables()  # deprecated alias of tf.global_variables_initializer()

# summary
logdir = './logs/m4d2a'
def summary_op(datapart='train'):
    tf.summary.scalar(datapart + '-loss', loss)
    tf.summary.scalar(datapart + '-eval', eval_op)
    return tf.summary.merge_all()

summary_op_train = summary_op()
summary_op_val = summary_op('val')

# whether to restore or not
ckpts_dir = 'ckpts/'
ckpt_nm = 'cnn-ckpt'
# saves all variables by default; pass a dict {'x': x, ...} to save specific ones
saver = tf.train.Saver(max_to_keep=50)
restore_step = ''
start_step = 0
train_steps = nBatchs
best_loss = 1e6
best_step = 0

# import os
# os.environ["CUDA_VISIBLE_DEVICES"] = "0"
# config = tf.ConfigProto()
# config.gpu_options.per_process_gpu_memory_fraction = 0.9
# config.gpu_options.allow_growth=True  # allocate when needed
# with tf.Session(config=config) as sess:
with tf.Session() as sess:
    sess.run(init)
    # loop variable named `it` so it does not shadow the input tensor x
    handle_train, handle_val, handle_test = sess.run(
        [it.string_handle() for it in [iter_train, iter_val, iter_test]])
    if restore_step:
        ckpt = tf.train.get_checkpoint_state(ckpts_dir)
        if ckpt and ckpt.model_checkpoint_path:  # ckpt.model_checkpoint_path is the latest ckpt
            if restore_step == 'latest':
                ckpt_f = tf.train.latest_checkpoint(ckpts_dir)
                start_step = int(ckpt_f.split('-')[-1]) + 1
            else:
                ckpt_f = ckpts_dir + ckpt_nm + '-' + restore_step
            print('loading wgt file: ' + ckpt_f)
            saver.restore(sess, ckpt_f)
    summary_wrt = tf.summary.FileWriter(logdir, sess.graph)
    if restore_step in ['', 'latest']:
        for i in range(start_step, train_steps):
            _, cur_loss, cur_train_eval, summary = sess.run(
                [train_op, loss, eval_op, summary_op_train],
                feed_dict={handle: handle_train, keep_prob: 0.5})
            # log to stdout and eval validation set
            if i % 100 == 0 or i == train_steps-1:
                saver.save(sess, ckpts_dir + ckpt_nm, global_step=i)  # save variables
                summary_wrt.add_summary(summary, global_step=i)
                cur_val_loss, cur_val_eval, summary = sess.run(
                    [loss, eval_op, summary_op_val],
                    feed_dict={handle: handle_val, keep_prob: 1.0})
                if cur_val_loss < best_loss:
                    best_loss = cur_val_loss
                    best_step = i
                summary_wrt.add_summary(summary, global_step=i)
                print('step %5d: loss %.5f, acc %.5f --- loss val %0.5f, acc val %.5f' % (
                    i, cur_loss, cur_train_eval, cur_val_loss, cur_val_eval))
                # sess.run(init_train)
        with open(ckpts_dir + 'best.step', 'w') as f:
            f.write('best step is %d\n' % best_step)
        print('best step is %d' % best_step)
    # eval test set
    test_loss, test_eval = sess.run([loss, eval_op],
                                    feed_dict={handle: handle_test, keep_prob: 1.0})
    print('eval test: loss %.5f, acc %.5f' % (test_loss, test_eval))
```
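The `7*7*64` in the flattening reshape comes from the two pooling layers. Here is a small sketch of that size bookkeeping in plain Python, assuming the usual rule that a 'same'-padded layer with stride s maps a side of length n to ceil(n / s); the helper name `same_out` is made up for illustration:

```python
def same_out(size, stride):
    """Output length along one dimension for 'same' padding: ceil(size / stride)."""
    return (size + stride - 1) // stride

size = 28                          # MNIST images are 28 x 28
size = same_out(size, 1)           # cnn1: stride-1 'same' conv keeps 28
after_pool1 = same_out(size, 2)    # mxpl1: 28 -> 14
size = same_out(after_pool1, 1)    # cnn2: stride-1 'same' conv keeps 14
after_pool2 = same_out(size, 2)    # mxpl2: 14 -> 7

channels = 64                      # number of filters in cnn2
flat_len = after_pool2 * after_pool2 * channels
print(after_pool1, after_pool2, flat_len)  # prints: 14 7 3136
```

So each example enters the first dense layer as a vector of 3136 values.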

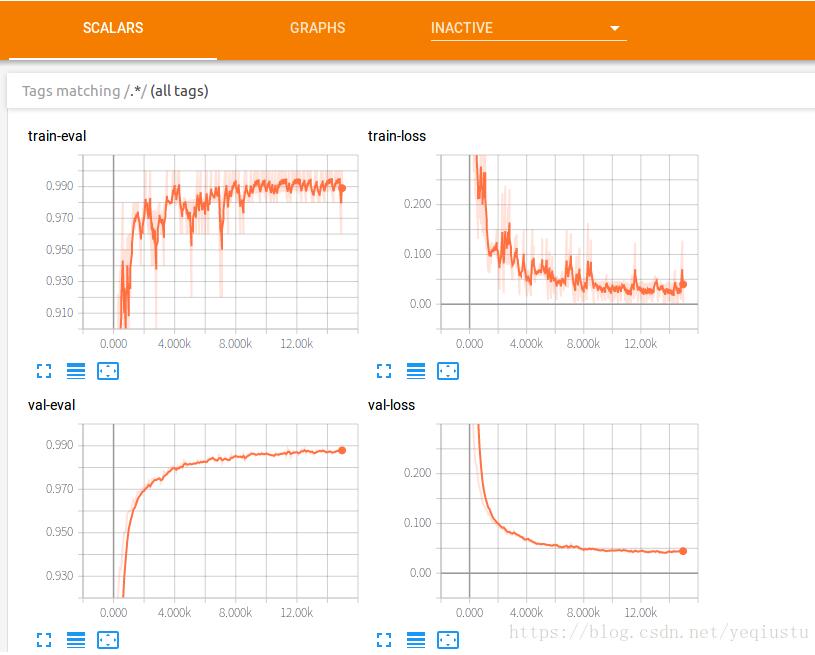

實驗結果:


Original link: https://blog.csdn.net/yeqiustu/article/details/79793454