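This article shares a worked example of face recognition based on a convolutional neural network, for your reference. The details are as follows: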

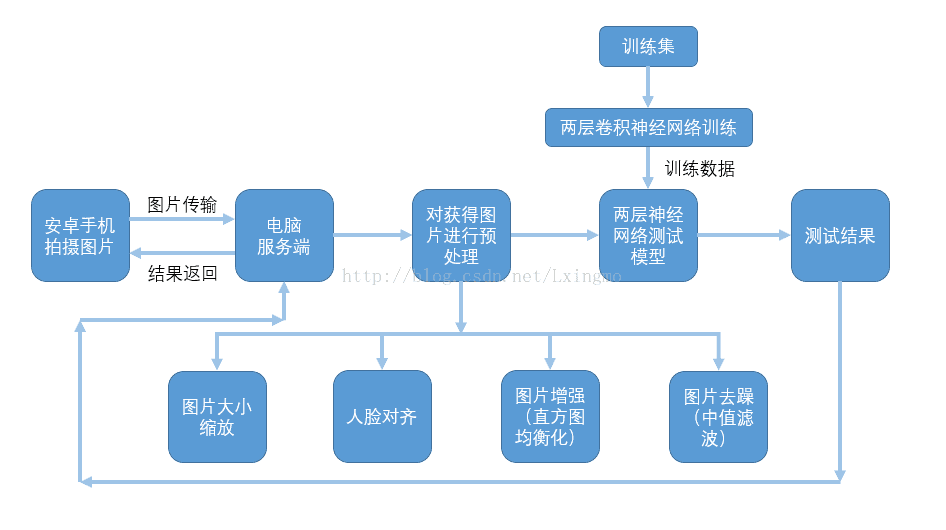

1. Overall design of the face recognition system

Client-server interaction flowchart:

2. Server-side code

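```python
import socket

# resize (3.1), cut_img (3.4) and test (5) are defined later in this article,
# which does not name the module files they live in.
# yuchuli (pinyin for "preprocessing") is called here but not shown in the
# original; presumably it applies the histogram equalization and median
# filtering of section 3.2 to test.jpg.

sk = socket.socket()
# bind() attaches the socket to an address; for AF_INET it is a (host, port) tuple.
sk.bind(("172.29.25.11", 8007))
# Start listening for incoming connections (backlog of 1 pending connection).
sk.listen(1)

while True:
    for i in range(100):
        # accept() returns (conn, address): conn is a new socket for sending and
        # receiving data, address is the client's address.
        conn, address = sk.accept()
        # Where to store the received image: 1.jpg ... 100.jpg, reused on each pass
        path = str(i + 1) + '.jpg'

        # Receive the image size in bytes. The server drops the first two
        # characters of this message (the client's exact format is not shown).
        size = conn.recv(1024)
        size_str = str(size, encoding="utf-8")
        size_str = size_str[2:]
        file_size = int(size_str)
        # Tell the client the size has been received
        conn.sendall(bytes('finish', encoding="utf-8"))

        # Number of image bytes received so far
        has_size = 0
        # Create the image file and write the incoming data into it
        with open(path, "wb") as f:
            while has_size < file_size:
                data = conn.recv(1024)
                if not data:  # client closed the connection early
                    break
                f.write(data)
                has_size += len(data)

        # Scale the image down
        resize(path)
        # cut_img(path) returns True if cropping succeeded, False otherwise
        if cut_img(path):
            yuchuli()
            result = test('test.jpg')
            conn.sendall(bytes(result, encoding="utf-8"))
        else:
            print('failure')
            conn.sendall(bytes('Eye detection failed; please make sure the eyes are clearly visible',
                               encoding="utf-8"))
        conn.close()
```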
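The receive loop above keeps calling `conn.recv(1024)` until `has_size` reaches `file_size`. The sketch below factors that logic into a function that stops if the client disconnects early. It also never reads past the announced size, so any later bytes stay in the socket. It is a minimal sketch: the helper name `recv_exact` is hypothetical and does not come from the original.

```python
import socket


def recv_exact(conn: socket.socket, n: int, chunk: int = 1024) -> bytes:
    """Read exactly n bytes from conn, or fewer if the peer closes first."""
    parts = []
    received = 0
    while received < n:
        # Never ask for more than what is still missing
        data = conn.recv(min(chunk, n - received))
        if not data:  # peer closed the connection
            break
        parts.append(data)
        received += len(data)
    return b''.join(parts)
```

Inside the server loop, `f.write(recv_exact(conn, file_size))` could replace the inner `while` loop. Comparing the length of the returned bytes with `file_size` tells you whether the upload was complete.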

3. Image preprocessing

1) Image scaling

```python
import cv2


# Scale the image down proportionally according to its height
def resize(path):
    image = cv2.imread(path, 0)  # 0: load as a single-channel grayscale image
    row, col = image.shape
    if row >= 2500:
        x, y = int(row / 5), int(col / 5)
    elif row >= 2000:
        x, y = int(row / 4), int(col / 4)
    elif row >= 1500:
        x, y = int(row / 3), int(col / 3)
    elif row >= 1000:
        x, y = int(row / 2), int(col / 2)
    else:
        x, y = row, col
    # cv2.resize takes dsize as (width, height), hence (y, x)
    res = cv2.resize(image, (y, x), interpolation=cv2.INTER_CUBIC)
    cv2.imwrite(path, res)
```
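`resize` divides both sides by an integer chosen from the image height (the number of rows), which keeps the aspect ratio. The pure-Python sketch below restates that rule without OpenCV. `scaled_dsize` is a hypothetical helper. It returns the `(width, height)` order that `cv2.resize` expects for `dsize`.

```python
def scaled_dsize(row, col):
    """Return the (width, height) that resize() passes to cv2.resize."""
    # (minimum height, divisor) pairs, checked from the largest height down
    for min_row, divisor in ((2500, 5), (2000, 4), (1500, 3), (1000, 2)):
        if row >= min_row:
            return int(col / divisor), int(row / divisor)
    # Small images are kept at their original size
    return col, row
```

For example, a photo with 3000 rows and 2000 columns gets divisor 5. It is stored as 600 rows by 400 columns, so `dsize` is `(400, 600)`.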

2) Histogram equalization and median filtering

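```python
import cv2

img = cv2.imread('test.jpg', 0)  # assumed input: the 8-bit grayscale cropped face
# Histogram equalization
eq = cv2.equalizeHist(img)
# Median filtering with a 3x3 kernel
lbimg = cv2.medianBlur(eq, 3)
```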
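The NumPy sketch below shows what the two OpenCV calls compute: CDF-based histogram equalization and a 3x3 median filter with replicated borders. It is an illustration, not OpenCV's implementation, and the rounding in `cv2.equalizeHist` may differ slightly. It makes three assumptions:

* The input is an 8-bit grayscale image.
* NumPy is version 1.20 or later, which provides `sliding_window_view`.
* The names `equalize_hist` and `median3x3` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def equalize_hist(img):
    """CDF-based histogram equalization of an 8-bit grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # pixel count of the darkest gray level present
    total = img.size
    if total == cdf_min:  # only one gray level: nothing to spread out
        return img.copy()
    # Map each gray level to its rescaled cumulative frequency in [0, 255]
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]


def median3x3(img):
    """3x3 median filter; borders are handled by replicating edge pixels."""
    padded = np.pad(img, 1, mode='edge')
    windows = sliding_window_view(padded, (3, 3))  # shape (rows, cols, 3, 3)
    return np.median(windows, axis=(-2, -1)).astype(img.dtype)
```

Equalization stretches the occupied gray levels over the full 0 to 255 range. The median filter removes isolated bright or dark pixels without blurring edges as much as an averaging filter would.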

3) Eye detection

```python
# -*- coding: utf-8 -*-
# Detect the eyes and return their positions
import cv2


def eye_test(path):
    # Path of the face image to examine
    imagepath = path
    # Load the pre-trained Haar cascade for eyes (with or without eyeglasses);
    # the XML file ships with OpenCV and is expected in the working directory
    eyeglasses_cascade = cv2.CascadeClassifier('haarcascade_eye_tree_eyeglasses.xml')
    # Read the image
    img = cv2.imread(imagepath)
    # Convert to grayscale
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Detect eyes; each detection is an (x, y, w, h) rectangle
    eyeglasses = eyeglasses_cascade.detectMultiScale(gray)
    # Only when exactly two eyes are found, return the centers of both
    if len(eyeglasses) == 2:
        num = 0
        for (e_gx, e_gy, e_gw, e_gh) in eyeglasses:
            # Mark the detection (for debugging only; img is not saved)
            cv2.rectangle(img, (e_gx, e_gy), (e_gx + e_gw, e_gy + e_gh), (0, 0, 255), 2)
            if num == 0:
                x1, y1 = e_gx + int(e_gw / 2), e_gy + int(e_gh / 2)
            else:
                x2, y2 = e_gx + int(e_gw / 2), e_gy + int(e_gh / 2)
            num += 1
        print('eye_test')
        return x1, y1, x2, y2
    else:
        return False
```
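`eye_test` returns the centers of the two detections in whatever order `detectMultiScale` reports them. `cut_img` then swaps them so that the eye with the smaller x coordinate comes first. The small pure-Python sketch below combines both steps. `eye_centers` is a hypothetical helper that is not in the original. "Left" means left in the image.

```python
def eye_centers(boxes):
    """Turn two (x, y, w, h) detections into ((left_x, left_y), (right_x, right_y)).

    Returns None unless exactly two boxes are given, mirroring eye_test's check.
    """
    if len(boxes) != 2:
        return None
    # Same integer center as eye_test: x + int(w / 2), y + int(h / 2)
    centers = [(x + int(w / 2), y + int(h / 2)) for (x, y, w, h) in boxes]
    # The eye with the smaller x comes first, as cut_img ensures by swapping
    centers.sort(key=lambda c: c[0])
    return centers[0], centers[1]
```

With a real detector, `eye_centers(eyeglasses_cascade.detectMultiScale(gray))` would return the two ordered centers in a single call.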

4) Eye alignment and cropping

```python
# -*- coding: utf-8 -*-
# Align the eyes and crop the face
# Parameters:
#   cropface(image, eye_left, eye_right, offset_pct, dest_sz)
#   eye_left:   position of the left eye
#   eye_right:  position of the right eye
#   offset_pct: percentage of the image to keep next to the eyes
#               (horizontal, vertical direction)
#   dest_sz:    size of the output image (width, height)
import math

from PIL import Image

from eye_test import eye_test


# Euclidean distance between two points
def distance(p1, p2):
    dx = p2[0] - p1[0]
    dy = p2[1] - p1[1]
    return math.sqrt(dx * dx + dy * dy)


# Build the affine transform from the parameters and return the transformed image
def scalerotatetranslate(image, angle, center=None, new_center=None, scale=None,
                         resample=Image.BICUBIC):
    if (scale is None) and (center is None):
        return image.rotate(angle=angle, resample=resample)
    nx, ny = x, y = center
    sx = sy = 1.0
    if new_center:
        (nx, ny) = new_center
    if scale:
        (sx, sy) = (scale, scale)
    cosine = math.cos(angle)
    sine = math.sin(angle)
    a = cosine / sx
    b = sine / sx
    c = x - nx * a - ny * b
    d = -sine / sy
    e = cosine / sy
    f = y - nx * d - ny * e
    return image.transform(image.size, Image.AFFINE, (a, b, c, d, e, f), resample=resample)


# Crop the face given the image, eye positions, offset ratio and output size
def cropface(image, eye_left=(0, 0), eye_right=(0, 0), offset_pct=(0.2, 0.2), dest_sz=(70, 70)):
    # Offsets (in output pixels) kept beside and above the eyes
    offset_h = math.floor(float(offset_pct[0]) * dest_sz[0])
    offset_v = math.floor(float(offset_pct[1]) * dest_sz[1])
    # Direction from the left eye to the right eye
    eye_direction = (eye_right[0] - eye_left[0], eye_right[1] - eye_left[1])
    # Rotation angle in radians
    rotation = -math.atan2(float(eye_direction[1]), float(eye_direction[0]))
    # Distance between the eyes in the original image
    dist = distance(eye_left, eye_right)
    # Distance the eyes should have in the output image
    reference = dest_sz[0] - 2.0 * offset_h
    # Scale factor
    scale = float(dist) / float(reference)
    # Rotate the original image around the left eye
    image = scalerotatetranslate(image, center=eye_left, angle=rotation)
    # Crop the rotated image: top-left corner and size
    crop_xy = (eye_left[0] - scale * offset_h, eye_left[1] - scale * offset_v)
    crop_size = (dest_sz[0] * scale, dest_sz[1] * scale)
    image = image.crop((int(crop_xy[0]), int(crop_xy[1]),
                        int(crop_xy[0] + crop_size[0]), int(crop_xy[1] + crop_size[1])))
    # Resize to the output size (Image.ANTIALIAS, removed in Pillow 10, was an alias of LANCZOS)
    image = image.resize(dest_sz, Image.LANCZOS)
    return image


def cut_img(path):
    image = Image.open(path)
    # eye_test returns the eye coordinates on success, False otherwise
    eyes = eye_test(path)
    if eyes:
        print('cut_img')
        leftx, lefty, rightx, righty = eyes
        # Make sure (leftx, lefty) is the eye with the smaller x coordinate
        if leftx > rightx:
            leftx, lefty, rightx, righty = rightx, righty, leftx, lefty
        # Align the eyes, crop, and save the result
        cropface(image, eye_left=(leftx, lefty), eye_right=(rightx, righty),
                 offset_pct=(0.30, 0.30), dest_sz=(92, 112)).save('test.jpg')
        return True
    else:
        print('failure')
        return False
```
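The geometry inside `cropface` is easier to follow with concrete numbers. The sketch below repeats its arithmetic for the call made in `cut_img`, which uses `offset_pct=(0.30, 0.30)` and `dest_sz=(92, 112)`. `crop_params` is a hypothetical helper written in pure Python, and it needs no image.

```python
import math


def crop_params(eye_left, eye_right, offset_pct=(0.3, 0.3), dest_sz=(92, 112)):
    """Reproduce cropface's arithmetic without touching any image."""
    offset_h = math.floor(offset_pct[0] * dest_sz[0])
    offset_v = math.floor(offset_pct[1] * dest_sz[1])
    dx = eye_right[0] - eye_left[0]
    dy = eye_right[1] - eye_left[1]
    # Rotation (radians) passed to the affine transform to level the eye line
    rotation = -math.atan2(dy, dx)
    # Original eye distance divided by the eye distance wanted in the output
    reference = dest_sz[0] - 2.0 * offset_h
    scale = math.hypot(dx, dy) / reference
    # Crop box in the rotated image: (left, upper, right, lower)
    left = eye_left[0] - scale * offset_h
    upper = eye_left[1] - scale * offset_v
    box = (left, upper, left + dest_sz[0] * scale, upper + dest_sz[1] * scale)
    return offset_h, offset_v, rotation, scale, box
```

Take eyes at (100, 100) and (160, 100). The offsets come out as 27 and 33 pixels, and the eyes must end up 92 - 2*27 = 38 pixels apart. That gives scale = 60 / 38 ≈ 1.58. The crop box is then roughly (57.4, 47.9, 202.6, 224.7), and the crop is resized to 92 x 112.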

4. Training the convolutional neural network

```python
# -*- coding: utf-8 -*-
import os

import cv2
import numpy as np
# TensorFlow 1.x graph-mode code; on TensorFlow 2.x it runs through compat.v1
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

# Pixels per image (112 x 92)
img_size = 112 * 92
# Number of people (output classes)
peoplenum = 42
# Training images per person (train_face_num)
trainnum = 15
# Training + test images per person (train_face_num + test_face_num)
each = 21


# Flatten a 2-D image into a 1 x (rows*cols) row vector
def img2vector1(filename):
    img = cv2.imread(filename, 0)
    row, col = img.shape
    return np.reshape(img, (1, row * col))


# Load the face data
def readdata(k):
    path = 'face_flip/'
    train_face = np.zeros((peoplenum * k, img_size), np.float32)
    train_face_num = np.zeros((peoplenum * k, peoplenum))
    test_face = np.zeros((peoplenum * (each - k), img_size), np.float32)
    test_face_num = np.zeros((peoplenum * (each - k), peoplenum))
    # Build the training and test face sets for all 42 people
    for i in range(peoplenum):
        # Current person (1-based)
        people_num = i + 1
        for j in range(each):
            # Image path: face_flip/s<person>/<index>.jpg
            filename = path + 's' + str(people_num) + '/' + str(j + 1) + '.jpg'
            # Flatten and scale pixel values to [0, 1]
            img = img2vector1(filename) / 255
            # One row per image; the label row is one-hot for the person
            if j < k:
                train_face[i * k + j, :] = img
                train_face_num[i * k + j, people_num - 1] = 1
            else:
                test_face[i * (each - k) + (j - k), :] = img
                test_face_num[i * (each - k) + (j - k), people_num - 1] = 1
    return train_face, train_face_num, test_face, test_face_num


# Training and test faces with their labels
train_face, train_face_num, test_face, test_face_num = readdata(trainnum)


# Weights
def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


# Biases
def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)


# Convolution; strides = [1, x_movement, y_movement, 1], strides[0] and strides[3] must be 1
def conv2d(x, w):
    return tf.nn.conv2d(x, w, strides=[1, 1, 1, 1], padding='SAME')


# 2x2 max pooling with stride 2 in both x and y
def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')


# Network inputs; pixels are already scaled to [0, 1] by readdata
xs = tf.placeholder(tf.float32, [None, 10304])  # 112*92
ys = tf.placeholder(tf.float32, [None, peoplenum])  # 42 outputs
keep_prob = tf.placeholder(tf.float32)
x_image = tf.reshape(xs, [-1, 112, 92, 1])  # [n_samples, 112, 92, 1]

# Convolutional layer 1: 5x5 patch, 1 input channel, 32 output channels
w_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
h_conv1 = tf.nn.relu(conv2d(x_image, w_conv1) + b_conv1)  # 112x92x32
h_pool1 = max_pool_2x2(h_conv1)  # 56x46x32

# Convolutional layer 2: 5x5 patch, 32 input channels, 64 output channels
w_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, w_conv2) + b_conv2)  # 56x46x64
h_pool2 = max_pool_2x2(h_conv2)  # 28x23x64

# Fully connected layer 1: [n_samples, 28, 23, 64] -> [n_samples, 28*23*64] -> 1024
w_fc1 = weight_variable([28 * 23 * 64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 28 * 23 * 64])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, w_fc1) + b_fc1)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# Fully connected layer 2: 1024 -> one output per person
w_fc2 = weight_variable([1024, peoplenum])
b_fc2 = bias_variable([peoplenum])
logits = tf.matmul(h_fc1_drop, w_fc2) + b_fc2
prediction = tf.nn.softmax(logits)

# Cross-entropy loss
cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=ys))
# L2 regularization of the fully connected layers, added to the loss
regularizers = (tf.nn.l2_loss(w_fc1) + tf.nn.l2_loss(b_fc1) +
                tf.nn.l2_loss(w_fc2) + tf.nn.l2_loss(b_fc2))
cost += 5e-4 * regularizers
# Minimize the loss with the Adam optimizer
train_step = tf.train.AdamOptimizer(1e-4).minimize(cost)

# Test-set accuracy, built once instead of adding new ops on every call
correct_prediction = tf.equal(tf.argmax(prediction, 1), tf.argmax(ys, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))


def compute_accuracy(v_xs, v_ys):
    return sess.run(accuracy, feed_dict={xs: v_xs, ys: v_ys, keep_prob: 1})


sess = tf.Session()
saver = tf.train.Saver()
sess.run(tf.global_variables_initializer())

# Train for 1000 steps, printing test-set results every 50 steps
for i in range(1000):
    sess.run(train_step, feed_dict={xs: train_face, ys: train_face_num, keep_prob: 0.5})
    if i % 50 == 0:
        print(sess.run(prediction[0], feed_dict={xs: test_face, keep_prob: 1}))
        print(compute_accuracy(test_face, test_face_num))

# Save the trained parameters (the directory must exist)
os.makedirs('my_data', exist_ok=True)
save_path = saver.save(sess, 'my_data/save_net.ckpt')
```
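The hard-coded `28*23*64` in the first fully connected layer follows from the input size. Stride-1 `'SAME'` convolutions keep the spatial size of 112x92, and each 2x2 `'SAME'` pooling with stride 2 halves it, rounding up. The plain-Python sketch below derives these sizes and the parameter count of each layer. `cnn_shapes` and `same_pool_size` are hypothetical helpers.

```python
import math


def same_pool_size(h, w, stride=2):
    """Output size of a pooling with padding='SAME': ceil(n / stride)."""
    return math.ceil(h / stride), math.ceil(w / stride)


def cnn_shapes(h=112, w=92, c1=32, c2=64, fc=1024, classes=42):
    # Stride-1 'SAME' convolutions keep the size; each pooling halves it (rounding up)
    h1, w1 = same_pool_size(h, w)
    h2, w2 = same_pool_size(h1, w1)
    flat = h2 * w2 * c2
    # Weights + biases per layer (5x5 kernels as in the network above)
    params = {
        'conv1': 5 * 5 * 1 * c1 + c1,
        'conv2': 5 * 5 * c1 * c2 + c2,
        'fc1': flat * fc + fc,
        'fc2': fc * classes + classes,
    }
    return (h1, w1), (h2, w2), flat, params
```

The sketch gives 41216 inputs to the first fully connected layer. That layer alone holds 42,206,208 of the network's 42,301,354 trainable parameters, so it accounts for almost all of the model's size.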

5. Testing with the convolutional neural network

```python
# -*- coding: utf-8 -*-
# Two convolutional layers followed by two fully connected layers
import cv2
import numpy as np
# TensorFlow 1.x graph-mode code; on TensorFlow 2.x it runs through compat.v1
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

# Number of network outputs (people)
peoplenum = 42


# Flatten a 2-D image into a 1 x (rows*cols) row vector
def img2vector1(img):
    row, col = img.shape
    return np.reshape(img, (1, row * col)).astype(np.float32)


# Weights
def weight_variable(shape):
    initial = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(initial)


# Biases
def bias_variable(shape):
    initial = tf.constant(0.1, shape=shape)
    return tf.Variable(initial)


# Convolution; strides = [1, x_movement, y_movement, 1], strides[0] and strides[3] must be 1
def conv2d(x, w):
    return tf.nn.conv2d(x, w, strides=[1, 1, 1, 1], padding='SAME')


# 2x2 max pooling with stride 2 in both x and y
def max_pool_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')


# Network inputs; test() scales pixels to [0, 1] before feeding them
xs = tf.placeholder(tf.float32, [None, 10304])  # 112*92
ys = tf.placeholder(tf.float32, [None, peoplenum])  # 42 outputs
keep_prob = tf.placeholder(tf.float32)
x_image = tf.reshape(xs, [-1, 112, 92, 1])  # [n_samples, 112, 92, 1]

# Convolutional layer 1: 5x5 patch, 1 input channel, 32 output channels
w_conv1 = weight_variable([5, 5, 1, 32])
b_conv1 = bias_variable([32])
h_conv1 = tf.nn.relu(conv2d(x_image, w_conv1) + b_conv1)  # 112x92x32
h_pool1 = max_pool_2x2(h_conv1)  # 56x46x32

# Convolutional layer 2: 5x5 patch, 32 input channels, 64 output channels
w_conv2 = weight_variable([5, 5, 32, 64])
b_conv2 = bias_variable([64])
h_conv2 = tf.nn.relu(conv2d(h_pool1, w_conv2) + b_conv2)  # 56x46x64
h_pool2 = max_pool_2x2(h_conv2)  # 28x23x64

# Fully connected layer 1: [n_samples, 28, 23, 64] -> [n_samples, 28*23*64] -> 1024
w_fc1 = weight_variable([28 * 23 * 64, 1024])
b_fc1 = bias_variable([1024])
h_pool2_flat = tf.reshape(h_pool2, [-1, 28 * 23 * 64])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, w_fc1) + b_fc1)
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

# Fully connected layer 2: 1024 -> one output per person
w_fc2 = weight_variable([1024, peoplenum])
b_fc2 = bias_variable([peoplenum])
logits = tf.matmul(h_fc1_drop, w_fc2) + b_fc2
prediction = tf.nn.softmax(logits)

sess = tf.Session()
# Restore the trained parameters; the variables above must be created in the
# same order as in the training script so their names match the checkpoint
saver = tf.train.Saver()
saver.restore(sess, 'my_data/save_net.ckpt')


# Return the name of the person who signed in
def find_people(people_num):
    if people_num == 41:
        return '任童霖'
    elif people_num == 42:
        return 'lzt'
    else:
        return 'another person'


def test(path):
    # Load the preprocessed 112x92 face and scale it to [0, 1]
    img = cv2.imread(path, 0) / 255
    test_face = img2vector1(img)
    print('true_test')
    # Find the person with the largest output and that output's share
    prediction1 = sess.run(prediction, feed_dict={xs: test_face, keep_prob: 1})
    prediction1 = prediction1[0].tolist()
    people_num = prediction1.index(max(prediction1)) + 1
    # Softmax outputs sum to 1, so this is simply the largest probability
    result = max(prediction1) / sum(prediction1)
    print(result, find_people(people_num))
    # A match requires the largest output to exceed 0.5
    if result > 0.50:
        # Record the sign-in; qiandaobiao is pinyin for "sign-in sheet", and
        # save.npy must already exist with at least one entry per person
        qiandaobiao = np.load('save.npy')
        qiandaobiao[people_num - 1] = 1
        np.save('save.npy', qiandaobiao)
        # Return the person's name + "signed in successfully"
        print(find_people(people_num) + ' has signed in')
        result = find_people(people_num) + ' signed in successfully'
    else:
        result = 'Sign-in failed'
    return result
```
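The decision at the end of `test` works in two steps. It takes the most probable person, then accepts the match only if that output exceeds 0.5. The minimal NumPy sketch below states the rule on its own. `sign_in` is a hypothetical name, and an in-memory array stands in for `save.npy`.

```python
import numpy as np


def sign_in(probs, sheet, threshold=0.5):
    """Apply test()'s decision rule to one softmax output vector.

    Returns the 1-based person number on a match (and marks it in sheet), else None.
    """
    probs = np.asarray(probs, dtype=float)
    people_num = int(np.argmax(probs)) + 1
    # Share of the largest output; equals the maximum itself for softmax outputs
    share = probs.max() / probs.sum()
    if share > threshold:
        sheet[people_num - 1] = 1
        return people_num
    return None
```

Softmax outputs already sum to 1, so `share` equals the largest probability. On a tie, `np.argmax` returns the first index, just like `list.index(max(...))` in `test`.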


Original article: https://blog.csdn.net/Lxingmo/article/details/76146646