jb51上面的資源還比較全,就準備用python來實現自動采集信息,與下載啦。

Python具有豐富和強大的庫,使用urllib,re等就可以輕松開發出一個網絡信息采集器!

下面,是我寫的一個實例腳本,用來采集某技術網站的特定欄目的所有電子書資源,并下載到本地保存!

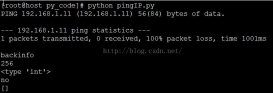

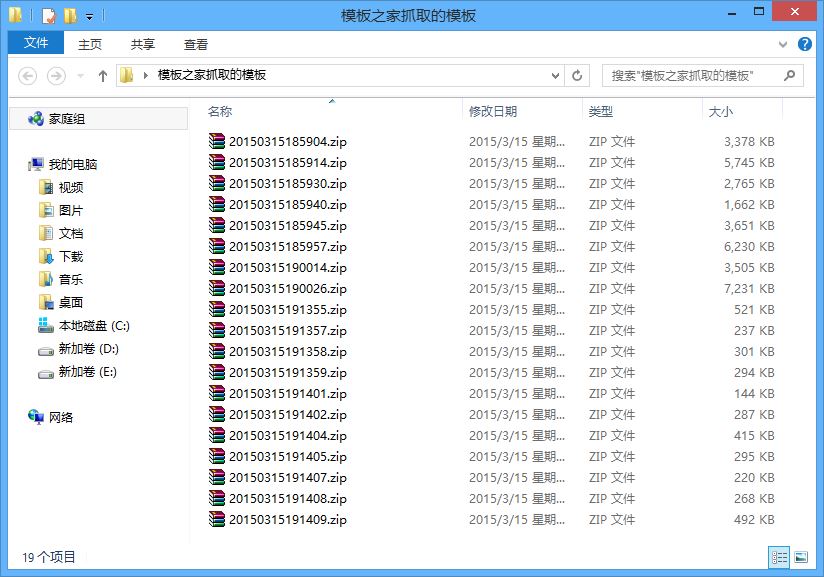

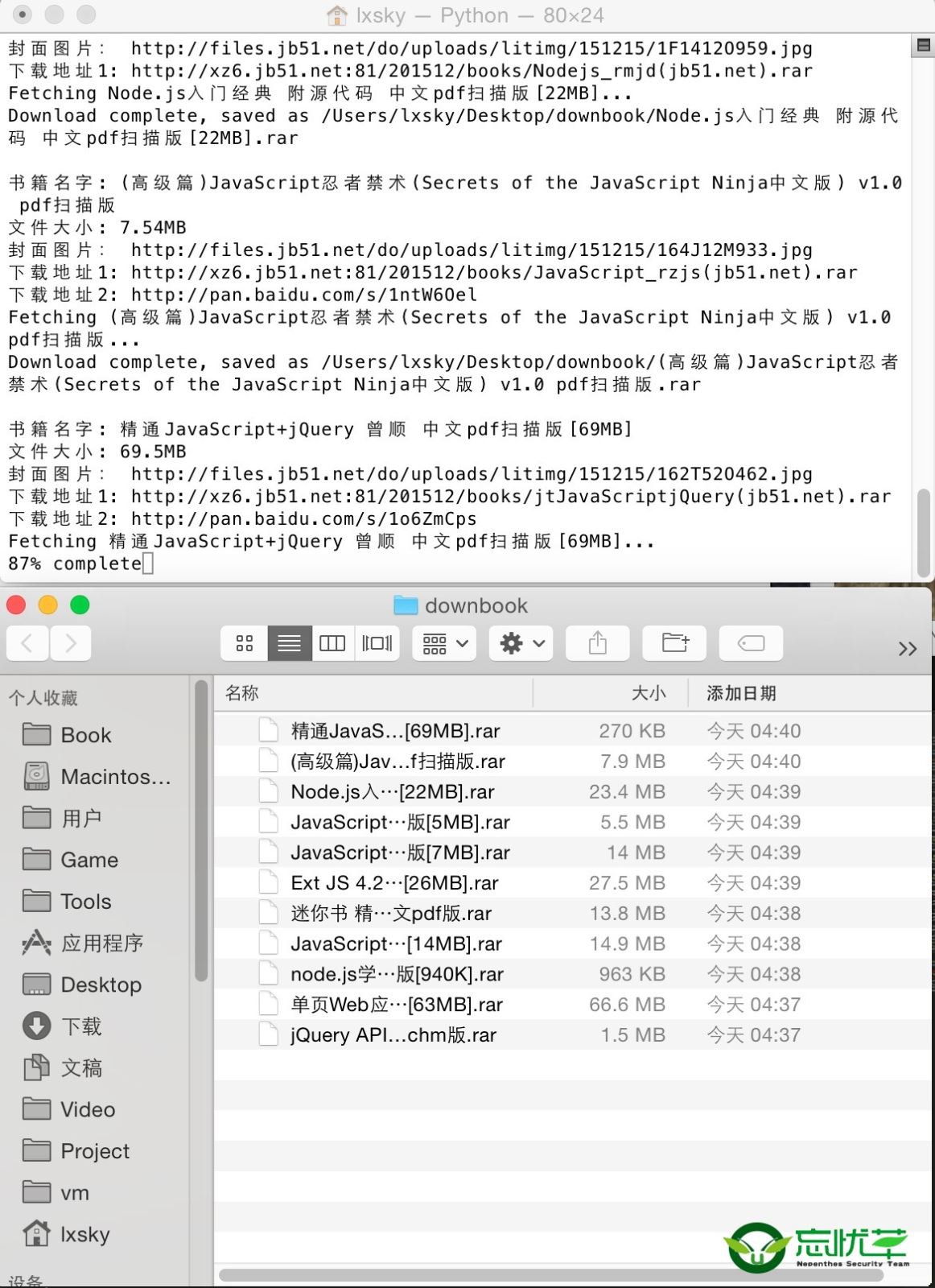

軟件運行截圖如下:

在腳本運行時期,不但會打印出信息到shell窗口,還會保存日志到txt文件,記錄采集到的頁面地址,書籍的名稱,大小,服務器本地下載地址以及百度網盤的下載地址!

實例采集并下載服務器之家的python欄目電子書資源:

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

|

# -*- coding:utf-8 -*-import reimport urllib2import urllibimport sysimport osreload(sys)sys.setdefaultencoding('utf-8')def getHtml(url): request = urllib2.Request(url) page = urllib2.urlopen(request) htmlcontent = page.read() #解決中文亂碼問題 htmlcontent = htmlcontent.decode('gbk', 'ignore').encode("utf8",'ignore') return htmlcontentdef report(count, blockSize, totalSize): percent = int(count*blockSize*100/totalSize) sys.stdout.write("r%d%%" % percent + ' complete') sys.stdout.flush()def getBookInfo(url): htmlcontent = getHtml(url); #print "htmlcontent=",htmlcontent; # you should see the ouput html #<h1 class="h1user">crifan</h1> regex_title = '<h1s+?itemprop="name">(?P<title>.+?)</h1>'; title = re.search(regex_title, htmlcontent); if(title): title = title.group("title"); print "書籍名字:",title; file_object.write('書籍名字:'+title+'r'); #<li>書籍大小:<span itemprop="fileSize">27.2MB</span></li> filesize = re.search('<spans+?itemprop="fileSize">(?P<filesize>.+?)</span>', htmlcontent); if(filesize): filesize = filesize.group("filesize"); print "文件大小:",filesize; file_object.write('文件大小:'+filesize+'r'); #<div class="picthumb"><a href="//img.zzvips.com/do/uploads/litimg/151210/1A9262GO2.jpg" target="_blank" bookimg = re.search('<divs+?class="picthumb"><a href="(?P<bookimg>.+?)" rel="external nofollow" target="_blank"', htmlcontent); if(bookimg): bookimg = bookimg.group("bookimg"); print "封面圖片:",bookimg; file_object.write('封面圖片:'+bookimg+'r'); #<li><a href="http://xz6.zzvips.com:81/201512/books/JavaWeb100(jb51.net).rar" target="_blank">酷云中國電信下載</a></li> downurl1 = re.search('<li><a href="(?P<downurl1>.+?)" rel="external nofollow" target="_blank">酷云中國電信下載</a></li>', htmlcontent); if(downurl1): downurl1 = downurl1.group("downurl1"); print "下載地址1:",downurl1; file_object.write('下載地址1:'+downurl1+'r'); sys.stdout.write('rFetching ' + title + '...n') title = title.replace(' ', ''); title = title.replace('/', ''); saveFile = '/Users/superl/Desktop/pythonbook/'+title+'.rar'; if os.path.exists(saveFile): print "該文件已經下載了!"; else: urllib.urlretrieve(downurl1, saveFile, reporthook=report); sys.stdout.write("rDownload complete, saved as %s" % (saveFile) + 'nn') sys.stdout.flush() file_object.write('文件下載成功!r'); else: print "下載地址1不存在"; file_error.write(url+'r'); file_error.write(title+"下載地址1不存在!文件沒有自動下載!r"); file_error.write('r'); #<li><a href="http://pan.baidu.com/s/1pKfVNwJ" rel="external nofollow" target="_blank">百度網盤下載2</a></li> downurl2 = re.search('</a></li><li><a href="(?P<downurl2>.+?)" rel="external nofollow" target="_blank">百度網盤下載2</a></li>', htmlcontent); if(downurl2): downurl2 = downurl2.group("downurl2"); print "下載地址2:",downurl2; file_object.write('下載地址2:'+downurl2+'r'); else: #file_error.write(url+'r'); print "下載地址2不存在"; file_error.write(title+"下載地址2不存在r"); file_error.write('r'); file_object.write('r'); print "n";def getBooksUrl(url): htmlcontent = getHtml(url); #<ul class="cur-cat-list"><a href="/books/438381.html" rel="external nofollow" class="tit"</ul></div><!--end #content --> urls = re.findall('<a href="(?P<urls>.+?)" rel="external nofollow" class="tit"', htmlcontent); for url in urls: url = "//www.ythuaji.com.cn"+url; print url+"n"; file_object.write(url+'r'); getBookInfo(url) #print "url->", urlif __name__=="__main__": file_object = open('/Users/superl/Desktop/python.txt','w+'); file_error = open('/Users/superl/Desktop/pythonerror.txt','w+'); pagenum = 3; for pagevalue in range(1,pagenum+1): listurl = "//www.ythuaji.com.cn/ books/list476_%d.html"%pagevalue; print listurl; file_object.write(listurl+'r'); getBooksUrl(listurl); file_object.close(); file_error.close(); |

注意,上面代碼部分地方的url被我換了。

總結

以上所述是小編給大家介紹的python采集jb51電子書資源并自動下載到本地實例腳本,希望對大家有所幫助,如果大家有任何疑問請給我留言,小編會及時回復大家的。在此也非常感謝大家對服務器之家網站的支持!

原文鏈接:http://www.topjishu.net/article/123.html