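Overview: How do you build a crawler with the Scrapy framework in four steps? What is the CrawlSpider template? How do you configure downloader middlewares? How do you deploy and monitor crawlers remotely with Scrapyd? The sections below walk through each of these in turn.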

Installing Scrapy (Mac)

    pip install scrapy

Note: do not install with the Python that ships with the Command Line Tools, or you may hit an architecture error; install with the Python provided by brew instead.

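Building a crawler with Scrapy

Create the project

scrapy startproject demoSpider, where demoSpider is the project name.

Define the target

Edit items.py and add the target fields, for example: person = scrapy.Field()

Write the spider

scrapy genspider demo "baidu.com" creates the demo spider file and sets the domain it is allowed to crawl.

Change start_urls in demo.py to the address you want to crawl, for example: https://www.cnblogs.com/teark/

Modify the parse() method as needed. To save the crawled page, for example: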

    def parse(self, response):
        # Save the downloaded page so its structure can be inspected
        with open("teark.html", "w", encoding="utf-8") as f:
            f.write(response.text)

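Run the spider and check the result: scrapy crawl demo

Once the page has been saved (the saving code can then be commented out or deleted), extract the data you need based on the page structure, usually with XPath expressions, for example: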

    def parse(self, response):
        # ItcastItem must be defined in items.py with 'title' and 'content' fields
        for each in response.xpath("//div[@class='teark_article']"):
            item = ItcastItem()
            title = each.xpath("h3/text()").extract()
            content = each.xpath("p/text()").extract()
            item['title'] = title[0]
            item['content'] = content[0]
            yield item
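The extraction loop above can be tried outside Scrapy. The following is a minimal sketch that swaps in the standard library's xml.etree.ElementTree for Scrapy's selectors; ElementTree supports only a subset of XPath and needs well-formed markup, so it is a stand-in rather than an equivalent. The SAMPLE markup and the extract_articles helper are hypothetical and exist only for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for the saved page
SAMPLE = """
<html><body>
  <div class="teark_article"><h3>First post</h3><p>Hello</p></div>
  <div class="teark_article"><h3>Second post</h3><p>World</p></div>
  <div class="sidebar"><h3>Ignored</h3><p>Not an article</p></div>
</body></html>
"""


def extract_articles(markup):
    """Collect title/content pairs from every div with class teark_article."""
    root = ET.fromstring(markup.strip())
    articles = []
    for each in root.findall(".//div[@class='teark_article']"):
        articles.append({
            "title": each.findtext("h3"),
            "content": each.findtext("p"),
        })
    return articles


articles = extract_articles(SAMPLE)
print(articles)
```

Only the two divs whose class matches are collected; the sidebar div is skipped, which mirrors what the class predicate does in the spider's XPath expression.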

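Save the data: scrapy crawl demo -o demo.json (the json, jsonl, csv and xml formats all work)

Note: getting the values right usually takes some debugging against the page. Use scrapy shell for this (installing ipython first is recommended, since it gives completion hints), and move the expressions into the code once they work, for example: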

    scrapy shell "https://www.cnblogs.com/teark/"

    site = response.xpath('//*[@class="even"]')
    print(site[0].xpath('./td[2]/text()').extract()[0])

Process the content

Pipelines are commonly used to store content. A pipeline class in pipelines.py must implement the process_item() method, and that method must return the Item object, for example:

    import json


    class DemoJsonPipeline(object):
        def __init__(self):
            # Text mode, because json.dumps returns str
            self.file = open('demo.json', 'w', encoding='utf-8')

        def process_item(self, item, spider):
            content = json.dumps(dict(item), ensure_ascii=False) + "\n"
            self.file.write(content)
            return item

        def close_spider(self, spider):
            self.file.close()

Add the ITEM_PIPELINES setting to settings.py, for example:

    ITEM_PIPELINES = {
        "demoSpider.pipelines.DemoJsonPipeline": 300
    }

Run the spider again with scrapy crawl demo and check whether demo.json has been created in the current directory.
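To see the order in which a pipeline's hooks run, here is a minimal sketch that drives a pipeline by hand. The run_pipeline function is a hypothetical stand-in for the Scrapy engine, and the path argument on JsonLinesPipeline is an assumption made for the demo (Scrapy itself creates pipelines without arguments unless from_crawler is defined).

```python
import json
import os
import tempfile


class JsonLinesPipeline:
    """Hypothetical pipeline that writes one JSON object per line."""

    def __init__(self, path):
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Called once when the spider starts
        self.file = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item  # hand the item on to the next pipeline

    def close_spider(self, spider):
        # Called once when the spider finishes
        self.file.close()


def run_pipeline(pipeline, items):
    """Stand-in for the engine: call the hooks in lifecycle order."""
    pipeline.open_spider(spider=None)
    try:
        return [pipeline.process_item(item, spider=None) for item in items]
    finally:
        pipeline.close_spider(spider=None)


out_path = os.path.join(tempfile.mkdtemp(), "demo.jsonl")
returned = run_pipeline(JsonLinesPipeline(out_path), [{"title": "a"}, {"title": "b"}])
with open(out_path, encoding="utf-8") as f:
    lines = [json.loads(line) for line in f]
print(lines)
```

Returning the item from process_item matters because a later pipeline (one with a higher number in ITEM_PIPELINES) receives whatever the earlier one returns.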

CrawlSpiders

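CrawlSpider is a subclass of Spider, designed to extract links from the pages it crawls and keep following them.

Quickly create a CrawlSpider from the template: scrapy genspider -t crawl baidu baidu.com

The Rule class defines the crawl rules; the LinkExtractor class extracts the links, for example: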

    scrapy shell "http://teark.com/article.php?&start=0#a"

    from scrapy.linkextractors import LinkExtractor
    # Escape the regex metacharacters . and ? so they match literally
    page_lx = LinkExtractor(allow=(r'article\.php\?&start=\d+',))
    page_lx.extract_links(response)

Once the test confirms which allow pattern and rules work, update the spider code:

    # Extract links matching 'http://teark.com/article.php?&start=\d+'
    page_lx = LinkExtractor(allow=(r'start=\d+',))

    rules = [
        # Parse each extracted link with the spider's parseContent method and
        # keep following links (without a callback, follow defaults to True)
        Rule(page_lx, callback='parseContent', follow=True)
    ]

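Note: never use 'parse' as the callback. CrawlSpider uses the parse method itself to apply its rules, so overriding it breaks the spider.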
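Why the escaping in the allow pattern matters can be checked with plain regular expressions, since LinkExtractor applies each allow pattern to the absolute URL as a regex search. The sketch below uses only the re module; the candidate URLs and the allowed helper are hypothetical.

```python
import re

# Escaped pattern: '.' and '?' are matched literally
ESCAPED = re.compile(r"article\.php\?&start=\d+")
# Unescaped pattern: '.' matches any character and 'p?' means "optional p"
UNESCAPED = re.compile(r"article.php?&start=\d+")

candidate_urls = [
    "http://teark.com/article.php?&start=0#a",
    "http://teark.com/article.php?&start=10#a",
    "http://teark.com/about.php",
    "http://teark.com/articleXphp&start=5",
]


def allowed(urls, pattern):
    """Keep the URLs in which the pattern is found anywhere."""
    return [url for url in urls if pattern.search(url)]


escaped_hits = allowed(candidate_urls, ESCAPED)
unescaped_hits = allowed(candidate_urls, UNESCAPED)
print(escaped_hits)
print(unescaped_hits)
```

The escaped pattern keeps the two real pagination URLs. The unescaped one misses them, because nothing in it matches the literal question mark, and instead accepts the malformed last URL.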

Logging

To enable logging to a file, add the following to settings.py:

    LOG_FILE = "DemoSpider.log"
    # Other levels: CRITICAL, ERROR, WARNING, DEBUG
    LOG_LEVEL = "INFO"
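Scrapy's logging is built on Python's standard logging module, so the effect of a level threshold can be sketched without Scrapy. The capture helper and the logger names below are hypothetical; the sketch only shows which messages a given threshold lets through.

```python
import io
import logging


def capture(level_name):
    """Log one message per level and return the level names that got through."""
    stream = io.StringIO()
    logger = logging.getLogger("demo_" + level_name)
    logger.setLevel(getattr(logging, level_name))
    logger.propagate = False  # keep the demo output out of the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s"))
    logger.addHandler(handler)
    for level in (logging.DEBUG, logging.INFO, logging.WARNING,
                  logging.ERROR, logging.CRITICAL):
        logger.log(level, "message")
    logger.removeHandler(handler)
    return stream.getvalue().split()


info_output = capture("INFO")
warning_output = capture("WARNING")
print(info_output)
print(warning_output)
```

With the threshold at INFO, DEBUG messages are dropped and everything from INFO upward is kept; raising it to WARNING drops INFO as well.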

FormRequest

FormRequest is used to send POST requests. In the spider file, for example:

    def start_requests(self):
        url = 'http://www.renren.com/PLogin.do'
        yield scrapy.FormRequest(
            url=url,
            formdata={"email": "teark@9133***34.com", "password": "**teark**"},
            callback=self.parse_page
        )
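FormRequest URL-encodes the formdata dictionary and sends it as the body of an application/x-www-form-urlencoded POST. The sketch below reproduces that encoding step with the standard library only; the form_body helper and the sample credentials are hypothetical.

```python
from urllib.parse import parse_qs, urlencode


def form_body(formdata):
    """Encode a dict of form fields the way an HTML form POST does."""
    return urlencode(formdata).encode("utf-8")


body = form_body({"email": "user@example.com", "password": "p@ss word"})
decoded = parse_qs(body.decode("utf-8"))
print(body)
print(decoded)
```

Reserved characters such as @ and spaces are escaped in the body, and decoding it gives back the original field values, which is what the server sees.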

Simulating a login:

    import scrapy


    class LoginSpider(scrapy.Spider):
        name = 'demo.com'
        start_urls = ['http://www.demo.com/users/login.php']

        def parse(self, response):
            # Fill in the login form found in the response and submit it
            return scrapy.FormRequest.from_response(
                response,
                formdata={'username': 'teark', 'password': '***'},
                callback=self.after_login
            )

        def after_login(self, response):
            # Check whether the login succeeded or failed
            if "authentication failed" in response.text:
                self.logger.error("Login failed")
                return

Downloader Middlewares

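Ways to avoid anti-crawler measures

Set a random User-Agent

Disable cookies; the COOKIES_ENABLED setting turns CookiesMiddleware on or off

Set a download delay to lower the request rate

Fetch page data from the cached copies held by search engines such as Google or Baidu

Use a pool of IP addresses: VPNs and proxy IPs

Use Crawlera (a proxy component built for crawlers); once its middleware is configured, all requests are sent through Crawlera

Configuring downloader middlewares

Each key is the path to a middleware class, and each value is that middleware's order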

    DOWNLOADER_MIDDLEWARES = {
        'mySpider.middlewares.MyDownloaderMiddleware': 543,
    }

When a request passes through a downloader middleware, its process_request method is called; when the downloader has completed the request and the response is passed back to the engine, its process_response method is called.
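The order values decide the sequence of these calls: process_request runs in increasing order, and process_response runs in decreasing order on the way back. The sketch below simulates that round trip in plain Python. TracingMiddleware and run_chain are hypothetical, and the simulation ignores the cases where a middleware short-circuits the chain by returning a response or request.

```python
class TracingMiddleware:
    """Hypothetical middleware that records when its hooks run."""

    def __init__(self, name, trace):
        self.name = name
        self.trace = trace

    def process_request(self, request, spider):
        self.trace.append(self.name + ".process_request")
        return None  # None means: continue with the next middleware

    def process_response(self, request, response, spider):
        self.trace.append(self.name + ".process_response")
        return response


def run_chain(middlewares, request):
    """Simplified stand-in for the engine/downloader round trip."""
    ordered = sorted(middlewares, key=middlewares.get)
    for mw in ordered:
        mw.process_request(request, spider=None)
    response = {"url": request["url"], "status": 200}  # pretend download
    for mw in reversed(ordered):
        response = mw.process_response(request, response, spider=None)
    return response


trace = []
middlewares = {
    TracingMiddleware("RandomUserAgent", trace): 1,
    TracingMiddleware("RandomProxy", trace): 100,
}
response = run_chain(middlewares, {"url": "http://example.com/"})
print(trace)
```

A low order value therefore places a middleware close to the engine: it sees each request first and each response last.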

Create a middlewares.py file in the same directory as settings.py, for example:

    import base64
    import random

    from mySpider.settings import PROXIES, USER_AGENTS


    class RandomUserAgent(object):
        def process_request(self, request, spider):
            # Pick a random User-Agent for each request
            useragent = random.choice(USER_AGENTS)
            request.headers.setdefault("User-Agent", useragent)


    class RandomProxy(object):
        def process_request(self, request, spider):
            proxy = random.choice(PROXIES)
            request.meta['proxy'] = "http://" + proxy['ip_port']
            if proxy['user_passwd'] is not None:
                # b64encode works on bytes: encode the credentials, decode the result
                base64_userpasswd = base64.b64encode(proxy['user_passwd'].encode()).decode()
                request.headers['Proxy-Authorization'] = 'Basic ' + base64_userpasswd

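When the proxy server requires authentication, the credentials must be base64-encoded and sent along as authorization information in the Proxy-Authorization header.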
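The encoding step can be sketched on its own with the standard library. The proxy_auth_header helper below is hypothetical; it builds the value of a Basic Proxy-Authorization header from a user:password string and then decodes it again to show that base64 is an encoding, not encryption.

```python
import base64


def proxy_auth_header(user_passwd):
    """Build a Basic auth header value from a 'user:password' string."""
    # b64encode takes and returns bytes, so convert on the way in and out
    token = base64.b64encode(user_passwd.encode("utf-8")).decode("ascii")
    return "Basic " + token


header = proxy_auth_header("user1:pass1")
scheme, token = header.split(" ", 1)
round_trip = base64.b64decode(token).decode("utf-8")
print(header)
print(round_trip)
```

Because the token can be decoded by anyone who sees it, the credentials are only as private as the connection to the proxy.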

Edit settings.py to configure USER_AGENTS and PROXIES

Free proxy IPs can be found by searching online; alternatively, buy working private proxy IPs

    USER_AGENTS = [
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
        "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5"
    ]

    PROXIES = [
        {'ip_port': '111.8.60.9:8123', 'user_passwd': 'user1:pass1'},
        {'ip_port': '101.71.27.120:80', 'user_passwd': 'user2:pass2'},
        {'ip_port': '122.96.59.104:80', 'user_passwd': 'user3:pass3'},
    ]

    # Disable cookies
    COOKIES_ENABLED = False

    # Set the download delay (in seconds)
    DOWNLOAD_DELAY = 3

    # Register the custom downloader middlewares
    DOWNLOADER_MIDDLEWARES = {
        # 'mySpider.middlewares.MyCustomDownloaderMiddleware': 543,
        'mySpider.middlewares.RandomUserAgent': 1,
        'mySpider.middlewares.RandomProxy': 100
    }

Scrapyd - Remote deployment and monitoring of crawlers

Install Scrapyd

    sudo pip install scrapyd
    sudo pip install scrapyd-client

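Edit the Scrapyd configuration file

Enable the Scrapyd configuration: after deploy, give the Scrapyd configuration name for the current project, then set the IP and port of the Scrapyd service and the name of the current project, for example: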

|

1

|

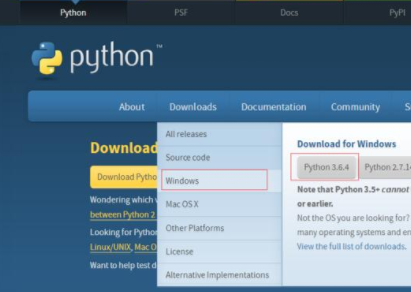

sudo vi /usr/local/lib/python3.8.6/site-packages/scrapyd/default_scrapyd.conf |

    # Location of the Scrapy project's settings file; no need to change it
    [settings]
    default = Demo.settings

    # Scrapyd_Demo is the configuration name
    [deploy:Scrapyd_Demo]
    # Use localhost if the Scrapyd service runs on this machine,
    # otherwise use the IP of the host running the service
    url = http://localhost:6800/
    # Name of the Scrapy project to deploy and monitor
    project = Demo
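The configuration above is in INI format, so its structure can be inspected with Python's configparser. This is only a sketch for checking what a deploy section contains, not how scrapyd-deploy itself reads the file; the deploy_targets helper is hypothetical.

```python
import configparser

CFG_TEXT = """
[settings]
default = Demo.settings

[deploy:Scrapyd_Demo]
url = http://localhost:6800/
project = Demo
"""


def deploy_targets(text):
    """Return {configuration name: options} for every [deploy:<name>] section."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    targets = {}
    for section in parser.sections():
        if section.startswith("deploy:"):
            name = section.split(":", 1)[1]
            targets[name] = dict(parser[section])
    return targets


targets = deploy_targets(CFG_TEXT)
print(targets)
```

The part of the section header after deploy: is the configuration name, which is the value later passed to scrapyd-deploy.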

Deploy the project with the Scrapyd client tool

Command: scrapyd-deploy Scrapyd_Demo -p Demo

scrapyd-deploy is the client command; its argument is the Scrapyd configuration name, and -p specifies the project name

Start and stop the crawler remotely

Start: curl http://localhost:6800/schedule.json -d project=Demo -d spider=demo

Stop: curl http://localhost:6800/cancel.json -d project=Demo -d job=iundsw....

Note: once the crawler has started successfully, a job value is generated; that job value is what you pass in to stop the crawler.
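The same two calls can be prepared from Python. The sketch below only builds the POST requests with the standard library and does not send them; SCRAPYD_URL, the helper names and the "some-job-id" value are assumptions for illustration. Sending a request would be done with urllib.request.urlopen, and the JSON response to schedule.json carries the job value in its jobid field.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed address of a locally running Scrapyd service
SCRAPYD_URL = "http://localhost:6800"


def schedule_request(project, spider):
    """Build the POST request that asks Scrapyd to start a spider."""
    data = urlencode({"project": project, "spider": spider}).encode("utf-8")
    return Request(SCRAPYD_URL + "/schedule.json", data=data, method="POST")


def cancel_request(project, job):
    """Build the POST request that asks Scrapyd to stop a running job."""
    data = urlencode({"project": project, "job": job}).encode("utf-8")
    return Request(SCRAPYD_URL + "/cancel.json", data=data, method="POST")


start = schedule_request("Demo", "demo")
stop = cancel_request("Demo", "some-job-id")  # placeholder job value
print(start.full_url, start.data)
print(stop.full_url, stop.data)
```

Keeping request construction separate from sending makes it easy to check the endpoint and parameters before touching a live Scrapyd instance.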


Original article: https://www.cnblogs.com/teark/p/14290334.html